Preface

About this guide

Most of you have probably experienced the process of writing a user guide. It is a terribly challenging experience.

We struggled to try to figure out the best way to write our manuals for years. Sometimes you think no matter what you write, nobody is going to read it. But, sometimes, you do get feedback from others and you’re convinced even the smallest detail must be written down, because someone will find it or miss it.

Then there’s the writing style. When we started back in 2005, we created something closer to a reference guide. It was painful to write, and I’m sure it was painful to read too. But, we tried with general examples, with a professional and impersonal tone… that’s what we thought would work at the time.

Later, we found out from our own blogging experience that good examples and a personal writing tone worked better. It seems we humans are much better at extracting general cases from particular ones than vice versa. And, they are much easier to write too.

The thing is that, after almost one-and-a-half decades in the making, the original reference style still persisted. So, we finally decided to go for a complete rewrite of our end-user manuals, applying all the lessons we learned along the way.

I still love the old user manuals that came with computers in the 1980s. You know, they were not just about how to use the thing, but about really becoming a master. My first programming book was a user manual from my first Amstrad PCW 256. I miss these manuals. Now, you usually don’t even get one when you receive a new laptop. I’m not sure if I’d really appreciate getting a copy of some C/C#/Javascript/whatever programming manual anyway, but it is all about nostalgia.

Anyway, this guide is not just about Plastic SCM. It is about how to become a better software developer mastering version control. It somehow tries to bring back some nostalgia from the old manuals, mixed with the "don’t write a reference guide anymore" hard-learned lesson.

It is prescriptive and opinionated sometimes, which now I think is a good thing because we propose things, then it is your choice to apply them. If we simply leave everything as an exercise to the reader, if we stay independent, then there’s not much value for you to extract as a reader. You can agree or disagree with us, but both will be valuable takeaways.

For years, we thought it would be too pretentious to tell teams how they should use our tool and implement version control. But, as I said, we learned that you choose our product not only for the binaries you download, but also to save hours of expert-seeking effort and benefit from our own experience. We build a version control platform and work with hundreds of teams around the world. We might be mistaken, but you can definitely benefit from both our mistakes and our wise choices.

This guide is our best attempt to put together all we know about version control and how to implement it, written in the most concise and personal way we know. We hope you enjoy it and find it helpful because that’s the only reason why we wrote it.

Welcome

Welcome to Plastic SCM!

If you’re reading this, chances are you’re interested in mastering version control and going beyond the most common tools and practices.

This guide will help you understand Plastic SCM, the underlying philosophy, and best practices it is built upon. It will also help you decide if it is the right tool for you or how to master it if you’ve already decided to use it.

Starting up

Let’s map a few concepts

Before we start, I’d like to compare a few concepts from Plastic SCM to other version control systems, so everything will be much easier for you to understand in the following sections.

| Plastic SCM | Git | Perforce | Comment |
|---|---|---|---|
| Checkin | Commit | Submit | To Checkin is to submit changes to the repository. |
| Changeset | Commit | Changelist | Each time you checkin, you create a new changeset. (In this book, we’ll use the abbreviation cset for changeset and csets for changesets.) |
| Update | Checkout | Sync | Download content to the local working copy. This happens, for instance, when you switch to a different branch. |
| Checkout | --- | Edit | When you checkout a file in Plastic, you’re telling Plastic you are going to modify the file. It is not mandatory and you can skip this step. But, if you are using locks (exclusive access to a file), then checkouts are mandatory. |
| main | master | main | A.k.a. trunk in Subversion. The main branch always exists in a repository when you create it. In Plastic, you can safely rename it if needed. |
| Repository | Repository | Depot | The place where all versions, branches, merges, etc. are stored. |
| Workspace | Working tree | Workspace | The directory where your project is downloaded to a local disk and that you use to work. In Git, the repo and working copy are tightly joined (the repo is inside a .git directory in the working copy). In Plastic, you can have as many workspaces as you want for the same repo. |

Now you might be wondering: Why don’t you version control designers simply use the same consistent naming convention for everything? The explanation could be really long here, but… yes, it’s just to drive you crazy 😉

A perfect workflow

It is a good idea to start with a vision of what is achievable. And that’s precisely what I’m going to do. First, I will describe what we consider to be state of the art in development workflows, what we consider to be the perfect workflow.

Key steps

- Task: It all starts with a task in your issue tracker or project management system: Jira, Bugzilla, Mantis, Ontime, or your own homemade solution. The key is that everything you do in code has an associated task. It doesn’t matter whether it is a part of a new feature or a bugfix; create a task for it. If you are not practicing this already, start now. Believe me, entering a task is nothing once you and your team get used to it.

- Task branch: Next, you create a branch for the task. Yes, one branch for each task.

- Develop: You work on your task branch and make as many checkins as you need. In fact, we strongly recommend checking in very, very often, explaining each step in the comments. This way, you’ll be explaining each step to the reviewer, and it really pays off. Also, don’t forget to add tests for any new feature or bugfix.

- Code review: Once you mark your task as completed, it can be code reviewed by a fellow programmer. No excuses here, every single task needs a code review.

- Validation: There is an optional step we do internally: validation. A peer switches to the task branch and manually tests it. They don’t look for bugs (automated tests take care of that) but look into the solution/fix/new feature from a customer perspective. The tester ensures the solution makes sense. This phase probably doesn’t make sense for all teams, but we strongly recommend it when possible.

- Automated testing and merge: Once the task is reviewed/validated, it will be automatically tested (after being merged, but before the merge is checked in; more on that later). Only if the test suite passes will the merge be confirmed. This means we avoid breaking the build at all costs.

- Deploy: You can get a new release after every new task passes through this cycle, or you can decide to group a few. We are in the DevOps age, with continuous deployment as the new normal, so deploying every task to production makes a lot of sense.

I always imagine the cycle like this:

As you can see, this perfect workflow aligns perfectly with the DevOps spirit. It is all about shortening the task cycle times (in Kanban style), putting new stuff in production as soon as possible, deploying new software as a strongly rooted part of your daily routine, and not an event as it still is for many teams.

Our secret sauce for all of this is the cycle I described above, which can be stated as follows: the recipe to safely deliver to production continuously is short-lived task branches (16h or less) that are reviewed and tested before being merged to main.

Workflow dynamics

How does all the above translate to version control? The "One task - one branch" chapter is dedicated to this, but let’s see a quick overview.

I’ll start right after you create a task for the new work to be done and create a new branch for it:

Notice that we recommend a straightforward branch naming convention: a prefix (task in the example) followed by the task number in the issue tracker. Thus, you keep full traceability of changes, and it is as simple as it can be. Remember, Plastic branches can have a description too, so there is room to explain the task. I like to copy the task headline from the issue tracker and put it there, although we have integrations with most issue trackers that will simply do that automatically if you set it up.
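The naming convention is simple enough to sketch in a few lines. The snippet below is only an illustration: the helper names are made up for this example, and the idea that the task branch may appear as the last segment of a longer path (as in /main/task1213) is an assumption, not something Plastic requires.

```python
import re
from typing import Optional

TASK_PREFIX = "task"  # the prefix used in the example above

# A short branch name is a prefix made of letters followed by the task number.
_SHORT_NAME = re.compile(r"^(?P<prefix>[A-Za-z]+)(?P<number>\d+)$")


def branch_name_for(task_number: int, prefix: str = TASK_PREFIX) -> str:
    """Build the branch name for a task in the issue tracker."""
    if task_number <= 0:
        raise ValueError("task numbers are expected to be positive")
    return f"{prefix}{task_number}"


def task_number_from(branch_name: str, prefix: str = TASK_PREFIX) -> Optional[int]:
    """Recover the task number from a branch name, or None if it is not a task branch."""
    short_name = branch_name.rsplit("/", 1)[-1]  # keep only the last path segment
    match = _SHORT_NAME.match(short_name)
    if match is None or match.group("prefix") != prefix:
        return None
    return int(match.group("number"))
```

Going from branch to task number and back is what gives you the traceability: any tool that sees the branch name can find the task, and vice versa.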

Now, you check in the changes you make to implement your task. Your task will look as follows (if it is a long task taking a few hours, you should have way more than a couple of checkins). Notice that the main branch has also evolved (I’m just doing this to make the scenario more realistic).

After more work, your task is completed in terms of development. You mark it as solved with an attribute (status) set to the task. Alternatively, you can mark it as completed in your issue tracker. It all depends on your particular toolset and how you will actually end up implementing the workflow.

Now it’s the reviewer’s turn to look at your code and see if they can spot any bugs or something in your coding style or particular design that should be changed.

As I described earlier, once the review is ok, a peer could do a validation too.

If everything looks good, the automatic part kicks in; your code merges and is submitted to the CI system to build and test.

Two important things to note here:

- The dashed arrows mean that the new changeset is temporary. We prefer not to check in the merge before the tests pass. This way, we avoid broken builds.

- We strongly encourage you to build and pass tests on the merged code. Otherwise, tests could pass in isolation in task1213 but ignore the new changes on the main branch.

Then the CI will take its time to build and pass tests depending on how fast your tests are. The faster, the better!
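To make the "test the merge before confirming it" idea concrete, here is a minimal sketch in Python. It is a toy model, not how Plastic or any CI system is implemented: branches are plain lists of change names, and gated_merge and no_conflicting_features are hypothetical names invented for this example.

```python
from typing import Callable, List


def gated_merge(
    main: List[str],
    task_changes: List[str],
    run_tests: Callable[[List[str]], bool],
) -> List[str]:
    """Return the new content of main: merged if the tests pass, unchanged otherwise."""
    candidate = main + task_changes  # temporary merge result, not confirmed yet
    if run_tests(candidate):
        return candidate  # tests passed on the merged code: confirm the merge
    return main  # tests failed: main stays exactly as it was


def no_conflicting_features(changes: List[str]) -> bool:
    """Toy test suite: it fails only when two specific changes meet."""
    return not ({"rename-api", "call-old-api"} <= set(changes))
```

In this toy scenario, a task containing call-old-api passes its tests in isolation, but the merge is rejected when main already contains rename-api. That is exactly the case that testing only the task branch would miss.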

If tests pass, the merge will be checked in (confirmed), and your task branch will look something like this:

Notice the status is now set to merged. Instead of an attribute set to the branch, you could track the status in your issue tracker (or on both, as we do internally).

If the new release is ready to be deployed, the new changeset on main is labeled as such and the software deployed to production (or published to our website as we do with Plastic SCM itself):

How to implement

By now, you must have a bunch of questions:

- What parts of what you describe are actually included in Plastic?

  Easy. Plastic is a version control system, so we help you with all the branching and merging. Branching and merging (even the visualization you saw in the examples above) are part of the product. Plastic is not a CI system, but it is a coordinator (thanks to mergebots, more on that later), so it can trigger the builds, automate the merges, perform the labeling, and more.

- What CI systems does Plastic integrate with?

  Virtually all of them, because Plastic provides an API. Out of the box, it integrates with Jenkins, Bamboo, and TeamCity.

- You mentioned you have a CI coordinator. Can’t I simply plug in my own CI?

  Of course, you can plug in your own CI and try to implement the cycle above. We have done it many times with customers.

- Do I need a CI system and an issue tracker to implement task branches?

  If you really want to take full advantage of the cycle above and complete automation, you should. But you can always go manually: you can create task branches, work on them, and have someone in the team (integrator/build-master) doing the merges. That’s fine, but not state of the art. We used to do it this way ourselves some time ago.

Where are you now?

You might be ahead of the game I described above. If that’s the case, we still hope you can fit Plastic into your daily work.

If that’s not the case, if you think this is a good workflow to strive for, then the rest of the book will help you figure out how to implement it with Plastic SCM.

Are task branches mandatory in Plastic SCM?

Nope! Plastic is extremely flexible and you’re free to implement any pattern you want.

But, as I mentioned in the introduction, I’m going to be prescriptive and recommend what we truly believe to work.

We strongly trust task branches because they blend very well with modern patterns like trunk based development, but we’ll cover other strategies in the book.

For instance, many game teams prefer working on a single branch, always checking in the main branch (especially artists), which is fine.

What is Plastic SCM

Plastic SCM is a full version control stack designed for branching and merging. It offers speed, visualization, and flexibility.

Plastic is perfect for implementing "task branches," our favorite pattern that combines really well with trunk-based development, DevOps, Agile, Kanban, and many other great practices.

When we say "full version control stack" we mean it is not just a bare-bones core. Plastic is the repository management code (the server), the GUI tools for Linux, macOS, Windows, command line, diff tools, merge tools, web interface, and cloud server. In short, all the tools you need to manage the versions of your project.

Plastic is designed to handle thousands of branches, automate merges other systems can’t, and provide the best diff and merge tools (we’re the only provider of semantic diff and merge). It lets you work centralized or distributed (Git-like, or SVN/P4-like), deals with huge repos (4TB is not an issue), and stays as simple as possible.

Plastic is not built on top of Git. It is a full stack so it is compatible with Git but doesn’t share the codebase. It is not a layer on top of Git like GitHub, GitLab, BitBucket, the Microsoft stack, and other alternatives. It is not just a GUI either like GitKraken, Tower and all the other Git GUI options. Instead, we build the entire stack. And, while this is definitely hard, it gives us the know-how and freedom to tune the entire system to achieve things others simply can’t.

Finally, explaining Plastic SCM means introducing the team that built it: we are probably the smallest team in the industry building a full version control stack. We are a compact team of developers with, on average, more than 8 years of experience building Plastic. This means a low-turnover, highly experienced group of people. You can meet us here.

We don’t want to capture users to sell them issue trackers, cloud storage, or build a social network. Plastic is our final goal. It is what makes us tick and breathe. And we put our souls into it to make it better.

Why would someone consider Plastic?

You have other version control options, mainly Git and Perforce.

You can go for bare Git, choose one of the surrounding Git offerings from corporations like Microsoft and Atlassian, or select Perforce. Of course, you can alternatively stick to SVN, CVS, TFS, Mercurial, or ClearCase… but we usually only find users moving away from these systems, not into them anymore.

Why would you choose Plastic instead of the empire of Git or the well-established Perforce (especially in the gaming industry)?

Plastic is a unique piece of software, used by high-performance teams who want to get the best out of version control. It’s for teams that need to do things differently than competitors who have already settled on one of the mainstream alternatives.

What does this mean exactly?

- Do you want to work distributed like Git but also have teams or team members working centralized? Git can’t solve your issue because it can’t do real centralized, and Perforce is not strong in a distributed environment.

- Does your team need to handle huge files and projects? This is very common in the gaming industry. Git can’t do big files and projects. Perforce is a good option, but it lacks the super-strong branching and merging of Plastic (and, again, it is not good at distributed environments).

- Do you regularly face complex merges? Plastic can handle merge cases out of the scope of Git and Perforce. Besides, we develop the best diff and merge tools you can find, including the ability to even merge code moved across files (semantic diff and merge, our unique technology).

- Do you need fast Cloud repos located as close to your location as possible? Plastic Cloud lets you choose from a list of more than 12 data centers.

- Finely-grained security? Plastic is based on access control lists. Git can’t do this by design. Perforce is good at this, but doesn’t reach the access control list level.

- Performance: Plastic is super-fast, even with repos that break Git or slow down Perforce. Again, a design choice.

- Excellent support: Our support is part of the product. This is how we see it. Plastic is great, but what really makes it beat our competitors is how we care about users. We answer more than 80% of questions in less than one hour, and 95% in under eight hours. And you can talk to real team members: not a call center, but real product experts who will help you figure out how to work better, help you solve issues, or simply listen to your suggestions.

- Responsive release cycles: We release faster than anyone else (several releases a week) to respond to our users' needs.

The list goes on and on, but you get the idea.

Who is using Plastic?

If Git is eating up the world of software development, who is selecting a niche player like Plastic?

Plastic is used by a wide range of teams: companies with 3000+ developers, small shops with just two programmers starting up the next big game, and the entire range in between. Teams of 20-50 developers are very common.

What is version control

Version control is the operating system of software development.

We see version control as the cornerstone the rest of the software development sits upon. It takes care of:

- The project structure and contents that editors, IDEs, and compilers will use to build.

- CI systems that grab changes, trigger builds, and deploy.

- Issue trackers and project management tools.

- Code review systems that access the changes to review and collaborate to deliver the software quickly (DevOps).

Version control is much more than a container. It is a collaboration tool. It is what team members will use to deliver each change. Properly used, it can shape the way teams work, enabling faster reviews, careful validation and frequent releases.

Version control is also a historian, the guardian of the library of your project. It contains the entire evolution of the project and can track handy information of why changes happened. This is the key for improvement: those who don’t know their history are condemned to repeat it.

We believe there is an extraordinary value in the project history, and we are only seeing the tip of the iceberg of what’s to come in terms of extracting value from it.

Plastic SCM Editions

Of course, the different editions we offer in Plastic SCM are crystal clear in our minds 😉. But sometimes, explanations are needed. You can find all the detailed info about different editions here.

Here is a quick recap:

| Type | Name | Who it is for |
|---|---|---|
| Paid and Free | Cloud Edition | Teams who don’t want to host their own Plastic SCM server. They can have local repos and push/pull to the Cloud. Or they can directly checkin/update to the Cloud. You get the storage, the managed cloud server, and all the Plastic SCM software with the subscription. Cloud Edition is dynamically licensed per user. This means if one month nobody in your team uses Plastic, you don’t pay for it, just for the repos stored in the cloud, if any. Cloud Edition is FREE up to a given amount of monthly users and GB of storage. |
| Paid | Enterprise Edition | Teams who host their own on-premises server. The subscription comes with all the software, server, tools, and several optimizations for high performance, integration with LDAP, proxy servers (the kind of things big teams and corporations need to operate). |

Plastic SCM is licensed per user, not server or machine. This means a developer can use a single license on macOS, Linux, and Windows and pays only one license. It also means you can install as many servers as you need without extra cost.

How to install Plastic

Installing Plastic SCM is straightforward: go to our downloads page and pick the right installer for your operating system. After that, everything is super easy. If you are on Linux, follow the two instructions for your specific distro flavor.

If you are joining an existing project

If you are just joining an existing project, you’ll probably just need the client.

- Ensure you understand whether you will work centralized (direct checkin) or distributed (local checkin to a local repo, then push/pull).

- Check the Edition your team is using and ensure you install the right one. If they are using Cloud Edition, you only need that. If they are in Enterprise, ensure you grab the correct binary. It is not much drama to change later, but if you are reading this, you already have the background to choose correctly 🙂

- If you are using Enterprise and you will work distributed (push/pull), you’ll need to install a local server.

If you are tasked to evaluate Plastic

Congrats! You are the champion of version control of your team now. Well, we’ll help you move forward. Here are a few hints:

- Check the edition that best suits your needs.

- You’ll need to install a server. The default installation is fine for production; it comes with our Jet storage, which is the fastest. If it is just you evaluating, you can install everything on your laptop, unless you want to run actual performance tests simulating real loads. But, functionality-wise, your laptop will be fine.

- You’ll need a client, too; GUIs and CLIs come in the same package. Easy!

Detailed installation instructions

Follow the installation and configuration guide to get detailed instructions of how to install Plastic on different platforms.

How to get help

It is important to highlight that you can contact us if you have any questions. Go to our support page and select the best channel.

- It can be an email to support (you can do that during evaluation).

- Check our forum.

- Create a ticket in our support system if you prefer.

- You can also reach us on Twitter @plasticscm.

You should know that we are super responsive! You’ll talk to the actual team and we care a lot about having an extraordinary level of response. Our stats say we answer 80% of the questions in under one hour and more than 95% in under eight hours.

Communication is key for a product like Plastic SCM. We aren’t a huge team hiding our experts behind several layers of first responders. You’ll have the chance to interact with the real experts. And it means not only answering simple questions, but also recommending to teams the best way of working based on their particularities.

By the way, we are always open to suggestions, too 😉

Command line vs. GUI

In many version control books, there are many command-line examples. Look at any book on Git, and you’ll find plenty of them.

Plastic is very visual because we spend lots of effort in the core and in the GUIs. That’s why traditionally most of our docs and blog posts were very GUI-centric.

In this guide, we will try to use the command line whenever it helps to better explain the underlying concepts, and we’ll stay interface-agnostic and concept-focused whenever possible. But, since GUIs are key in Plastic, expect some screenshots, too 🙂

A Plastic SCM primer

Let’s get more familiar with Plastic SCM before covering the recommended key practices about navigating branching, merging, pushing, and pulling.

This chapter aims to get you started with Plastic and learn how to run the most common operations that will help you during the rest of the book.

Get started

I’m going to assume you’ve already installed Plastic SCM on your computer. If you need help installing, I recommend you follow our version control installation guide.

The first thing you need to do is to select a default server and enter the correct credentials to work on it.

If you use the command line, you can run clconfigureclient.

If you use the GUI, the first thing you will see is a screen like this:

Here you enter the server, decide whether you want to connect through SSL, and then enter your credentials.

After that, the GUI guides you through creating your first workspace connected to one of the repos on the server you selected.

About the GUI

As you saw in the figure above, we’ll be using mockups to represent the GUI elements instead of screenshots of the actual Linux, macOS or Windows GUIs. I prefer to do this for two reasons: first, to decouple this guide from actual GUI changes, and second, to avoid certain users feeling excluded. I mean, if I take screenshots in Windows, then Linux and macOS users will feel like second-class citizens. On the other hand, if I add macOS screenshots, Windows and Linux users will be uncomfortable. So, I finally decided to show mockups that will help you find your way on your platform of choice.

Notice that I entered localhost:8087 as the server. Let me explain a little bit about this:

- For Cloud Edition users, the server will typically be "local", without a port number. This is because every Cloud Edition comes with a built-in local server and a simplified way to access it.

- If you are evaluating Enterprise Edition and you installed a server locally on your machine, localhost:8087 will be the default.

- If you are connected to a remote server in your organization, your server will be something like skull.codicefactory.com:9095, which is the one we use.

- If you connect directly to a Plastic Cloud server, it will be something like robotmaker@cloud, where robotmaker will be the name of your cloud organization instead.
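If it helps to see the four cases side by side, the following Python sketch classifies a server string according to the descriptions above. It only covers the forms mentioned in this section; the function name and the returned labels are made up for illustration and are not part of Plastic.

```python
def describe_server(server: str) -> str:
    """Classify a server string using only the forms described in this section."""
    if server == "local":
        return "cloud-edition-local"  # built-in local server of Cloud Edition
    if server.endswith("@cloud"):
        return "plastic-cloud"  # organization@cloud
    host, separator, port = server.rpartition(":")
    if separator and host and port.isdigit():
        # host:port, either on your own machine or somewhere in your organization
        return "local-server" if host == "localhost" else "remote-server"
    return "unknown"
```

For instance, describe_server("localhost:8087") gives "local-server", while describe_server("robotmaker@cloud") gives "plastic-cloud".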

3 key GUI elements

Before moving forward, I’d like to highlight the three most essential elements you will use in the GUIs.

- Workspace Explorer (previously known as "items view").

- Pending Changes, a.k.a. Checkin Changes.

- Branch Explorer.

Workspace Explorer

This view is like a Windows Explorer or macOS Finder with version control information columns.

You can see the files you have loaded into your workspace and the date when they were written to disk, and also the changeset and branch where they were created (although I’m not including these last two in the figure above).

It is the equivalent of running a cm ls command.

cm ls

0 12/28/2018 14:49 dir br:/main .

0 11/29/2018 14:49 dir br:/main art

0 12/10/2018 18:23 dir br:/main code

The most exciting things about the Workspace Explorer are:

- It comes with a finder that lets you locate files quickly by name patterns. CTRL-F on Windows or Command-F on macOS.

- There is a context menu on every file and directory to trigger specific actions:

Actions are enabled depending on the context (for example, you can’t check the history of a private file).

Pending Changes

This view is so useful! You can perform most of your daily operations from here. In fact, I strongly recommend using this view and not the Workspace Explorer to add files and check in changes.

The layout of Pending Changes in all our GUIs looks like this:

- There are important buttons at the top to refresh and find changes (although you can also configure all GUIs to do auto-refresh), check in, and undo changes.

- Then, there is a section to enter checkin comments. This is the single most important thing to care about when you are in this view: ensure the comment is relevant. More about checkin comments later in the chapter about task branches.

- And finally, the list of changes. As you can see, Pending Changes splits the changes into "changed", "deleted", "moved", and "added". Much more about these options in the "Finding changes" section in the "Workspaces" chapter.

The other key thing about Pending Changes is the ability to display the diffs of a selected file:

Plastic comes with its own built-in diff tool. And as you can see, it can do amazing things like tracking methods that were moved. We are very proud of our SemanticMerge technology.

Most of the Plastic SCM actions you will do while developing are done from Pending Changes. You simply switch to Plastic, see the changes, check in, and get back to work!

Branch Explorer

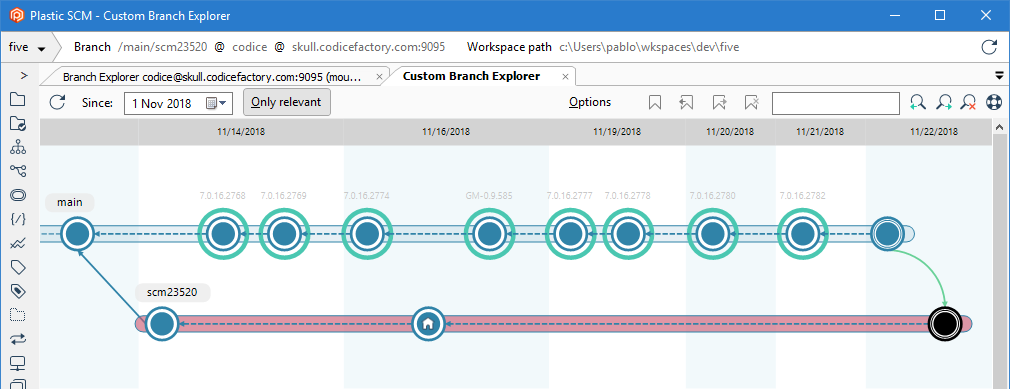

The Branch Explorer diagram is our crown jewel. We are super proud of it and the way it can render the evolution of the repos. The Branch Explorer (a.k.a. BrEx) view has the following layout:

Every circle in the graphic is a changeset. The changesets are inside containers that represent the branches. The diagram evolves from left to right, where changesets on the right are newer than those on the left. The actual Branch Explorer in the GUIs draws columns with dates to clarify this.

The green arrows are merges and the blue ones connect changesets with a "is the parent of" relationship.

The green donuts surrounding changesets are labels (equivalent to Git tags).

The Branch Explorer lets you filter the date from where you want to render the repo, and it has zoom, a search box, and also an action button to show "only relevant" changesets. It also shows a "house icon" in the changeset your workspace is currently on (this is true for regular Plastic, not Gluon, as you will see in the "Partial Workspaces" section).

By the way, if you click "Only relevant", the Branch Explorer will compact as the following figure shows, since the "relevant changesets" are:

- Beginning of a branch.

- End of a branch.

- Labeled changeset.

- Source of a merge.

- Destination of a merge.

This way, it is very easy to make large diagrams more compact by hiding "non-relevant" changesets.
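The five rules are easy to express in code. The sketch below is a simplified model for illustration, not the Branch Explorer's actual implementation: the Changeset class and its fields are hypothetical, and it assumes changeset ids grow over time so that the lowest and highest ids on a branch are its beginning and end.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Changeset:
    cset_id: int  # assumed to increase over time
    branch: str
    labels: List[str] = field(default_factory=list)
    merged_from: List[int] = field(default_factory=list)  # ids of merge sources


def relevant_changesets(csets: List[Changeset]) -> Set[int]:
    """Return the ids that stay visible when only relevant changesets are shown."""
    relevant: Set[int] = set()
    first_on_branch: Dict[str, int] = {}
    last_on_branch: Dict[str, int] = {}
    for cset in sorted(csets, key=lambda c: c.cset_id):
        first_on_branch.setdefault(cset.branch, cset.cset_id)  # beginning of a branch
        last_on_branch[cset.branch] = cset.cset_id  # end of a branch (so far)
        if cset.labels:
            relevant.add(cset.cset_id)  # labeled changeset
        if cset.merged_from:
            relevant.add(cset.cset_id)  # destination of a merge
            relevant.update(cset.merged_from)  # source(s) of a merge
    relevant.update(first_on_branch.values())
    relevant.update(last_on_branch.values())
    return relevant
```

Everything not in the returned set is a "non-relevant" changeset that the compact diagram can hide.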

From the Branch Explorer context menus, you can perform several key actions. You can select any branch, changeset, or label and right-click it to discover its actions.

Typical actions you can perform are:

- Switch your workspace to a different branch, changeset, or label.

- Diff branches, changesets, and labels.

- Push and pull branches.

- Merge branches and changesets.

- Set attributes on branches.

There are other views but…

In my opinion, you can perform all the daily actions just using Pending Changes and the Branch Explorer, and maybe a little bit of the Workspace Explorer. If I had to remove everything except what was absolutely essential, Plastic would run just with these three.

There are other views like the Branches View, which lists branches more traditionally (a list), Changesets View, Attributes, Labels… but you can use the Branch Explorer instead for a more visual view.

Listing repos on different servers

In the command line, run:

cm repository list

In my case, this is the output of the command:

cm repository list

quake@192.168.221.1:6060

quake_replicated@192.168.221.1:6060

quake_from_git@192.168.221.1:6060

ConsoleToast@192.168.221.1:6060

PlasticNotifier@192.168.221.1:6060

asyncexample@192.168.221.1:6060

geolocatedcheckin@192.168.221.1:6060

p2pcopy@192.168.221.1:6060

udt@192.168.221.1:6060

wifidirect@192.168.221.1:6060

PeerFinder@192.168.221.1:6060

The repository [list | ls] command shows the list of the repos on your default server (the one you connected to initially).

But it also works if you list the repos of another server:

>cm repository list skull:9095

Enter credentials to connect to server [skull:9095]

User: pablo

Password: *******

codice@skull:9095

pnunit@skull:9095

nervathirdparty@skull:9095

marketing@skull:9095

tts@skull:9095

I used the command to connect to skull:9095 (our main internal server at the time of this writing, a Linux machine) and Plastic asked me for credentials. The output I pasted is greatly simplified since we have a list of over 40 repos on that server.

You can also use repository list to list your cloud repos. In my case:

>cm repository list codice@cloud

codice@codice@cloud

nervathirdparty@codice@cloud

pnunit@codice@cloud

marketing@codice@cloud

plasticscm.com@codice@cloud

devops@codice@cloud

installers@codice@cloud

This is again a simplified list. This time the command didn’t request credentials since I have a profile for the codice@cloud server.

You get the point; every server has repos, and you can list them if you have the permissions.

In your case, cm repository list will most likely show something like:

cm repository list

default@localhost:8087

By default, every Plastic installation creates a repo named default so you can start doing tests with it instead of starting from a repo-less situation.

From the GUIs, there are "repository views" where you can list the available repos:

There is always a way to enter a different server that you want to explore and also a button to create new repos if needed.

Create a repo

Creating a new repository is very simple:

cm repository create myrepo@localhost:8087

Here, myrepo@localhost:8087 is a "repo spec," as we call it.

Using the GUI is straightforward, so I won’t show a screenshot; it is just a dialog where you can type a name for the repo 🙂.

Of course, you can only create repositories if you have the mkrep permission granted on the server.
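Judging from the listings above, a repo spec reads as "repository name, an @, and then the server." Here is a minimal sketch of how you could split one in your own scripts. The `RepoSpec` type and the `parse_repo_spec` helper are names I made up for the example; they are not part of Plastic, and the splitting rule is only what the examples in this chapter suggest.

```python
from typing import NamedTuple


class RepoSpec(NamedTuple):
    name: str    # repository name, e.g. "myrepo"
    server: str  # everything after the first "@", e.g. "localhost:8087"


def parse_repo_spec(spec: str) -> RepoSpec:
    """Split a repo spec at the first '@' (hypothetical helper)."""
    name, sep, server = spec.partition("@")
    if not sep or not name or not server:
        raise ValueError(f"not a repo spec: {spec!r}")
    return RepoSpec(name, server)


print(parse_repo_spec("myrepo@localhost:8087"))
print(parse_repo_spec("codice@codice@cloud"))
```

Splitting at the first @ is what keeps cloud servers such as codice@cloud in one piece.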

Create a workspace

A workspace is a directory on a disk that you will use to connect to a repository. Let’s start with a clean workspace.

If you run:

cm workspace create demowk c:\users\pablo\wkspaces\demowk

Plastic will create a new workspace named demowk inside the path you specified.

It is very typical to do this instead:

cd wkspaces

mkdir demowk

cd demowk

cm workspace create demowk .

Notice I didn’t specify which repository to use for the workspace. By default, the first repo in the default server is used, so in your case it will most likely be your default repo. There are two alternatives to configure the repo:

cm workspace create demowk . --repository=quake@localhost:9097

Or, simply create it in the default location and then switch to a different repo:

cm workspace create demowk .

cm switch main@quake

Workspaces have a name and a path for easier identification. And, you can list your existing workspaces with cm workspace list.

>cm workspace list

four@MODOK c:\Users\pablo\wkspaces\dev\four

five@MODOK c:\Users\pablo\wkspaces\dev\five

tts@MODOK c:\Users\pablo\wkspaces\dev\tts

plasticscm-com@MODOK c:\Users\pablo\wkspaces\dev\plasticscm-com

doc-cloud@MODOK c:\Users\pablo\wkspaces\dev\doc-cloud

plasticdocu_cloud@MODOK c:\Users\pablo\wkspaces\dev\plasticdocu_cloud

mkt_cloud@MODOK c:\Users\pablo\wkspaces\mkt-sales\mkt_cloud

udtholepunch@MODOK c:\Users\pablo\wkspaces\experiments\udtholepunch

The previous listing is a subset of my local workspaces on my modok laptop 🙂.

From the GUI, there’s always a view to list and create workspaces:

As you see, there can be many workspaces on your machine, and they can be pointing to different repos both locally and on distant servers. Plastic is very flexible in that sense as you will see in "Centralized & Distributed."

Creating a workspace from the GUI is straightforward, as the following figure shows:

Suppose you create a workspace to work with an existing repo. Just after creating the workspace, your Branch Explorer places the home icon in changeset zero. Initially, workspaces are empty until you run a cm update (or GUI equivalent) to download the contents. That’s why initially, they point to changeset zero.

Adding files

Let’s assume you just created your workspace demowk pointing to the empty default repo on your server. Now, you can add a few files to the workspace:

echo foo > foo.c

echo bar > bar.c

Then, check the status of your workspace with cm status as follows:

>cm status

/main@default@localhost:8087 (cs:0 - head)

Added

Status Size Last Modified Path

Private 3 bytes 1 minutes ago bar.c

Private 3 bytes 1 minutes ago foo.cThe GUI equivalent will be:

You can easily check in the files from the GUI by selecting them and clicking checkin.

From the command line, you can also do this:

cm add foo.c

After that, cm status will display the following:

>cm status

/main@default@localhost:8087 (cs:0 - head)

Added

Status Size Last Modified Path

Private 3 bytes 3 minutes ago bar.c

Added 3 bytes 3 minutes ago foo.c

Note how foo.c is now marked as "added" instead of simply "private" because you already told Plastic to track it, although it wasn’t yet checked in.

If you run cm status with the --added flag:

>cm status --added

/main@default@localhost:8087 (cs:0 - head)

Added

Status Size Last Modified Path

Added 3 bytes 5 minutes ago foo.c

foo.c is still there while bar.c is not displayed.

Ignoring private files with ignore.conf

There will be many private files in the workspace that you won’t need to check in (temporary files, intermediate build files (the .obj), etc). Instead of seeing them repeatedly in the GUI or cm status, you can hide them by adding them to ignore.conf. Learn more about ignore.conf in "Private files and ignore.conf."

Initial checkin

If you run cm ci from the command line now, only foo.c will be checked in unless you use the --private modifier or run cm add for bar.c first. Let’s suppose we simply checkin foo.c:

>cm ci -c "Added foo.c"

The selected items are about to be checked in. Please wait ...

| Checkin finished 33 bytes/33 bytes [##################################] 100 %

Modified c:\Users\pablo\wkspaces\demowk

Added c:\Users\pablo\wkspaces\demowk\foo.c

Created changeset cs:1@br:/main@default@localhost:8087 (mount:'/')

Notice how I added a comment for the checkin with the -c modifier. This is the comment that you will see in the Branch Explorer (and changeset list) associated with the changeset 1 you just created.
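The rule about which files a plain checkin picks up is simple enough to write down. The following is a toy model of what I described in this section, not Plastic code: `items_to_checkin` and its `include_private` parameter are hypothetical names, with `include_private` standing in for the --private modifier.

```python
def items_to_checkin(status, include_private=False):
    """Pick the paths a checkin would take.

    status maps a path to the state shown by cm status:
    "Private", "Added", or "Changed".
    """
    tracked = {"Added", "Changed"}
    return sorted(
        path
        for path, state in status.items()
        if state in tracked or (include_private and state == "Private")
    )


status = {"bar.c": "Private", "foo.c": "Added"}
print(items_to_checkin(status))                        # ['foo.c']
print(items_to_checkin(status, include_private=True))  # ['bar.c', 'foo.c']
```

Private files stay out unless you ask for them explicitly, which is exactly why bar.c was left behind in the checkin above.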

Checkin changes

Let’s now make some changes to our recently added foo.c. Then run cm status:

>cm status

/main@default@localhost:8087 (cs:1 - head)

Changed

Status Size Last Modified Path

Changed 1024 bytes 1 minutes ago foo.c

Added

Status Size Last Modified Path

Private 1024 bytes 5 minutes ago bar.c

As you can see, foo.c is detected as changed, and the old bar.c is still detected as ready to be added (private).

From the GUI, the situation is as follows:

And, you’ll be able to easily select files, diff them, and check in.

Undoing changes

Suppose you want to undo the change done to foo.c.

From the GUI, it will be as simple as selecting foo.c and clicking "Undo."

Important: Only the checked elements are undone.

From the command line:

>cm undo foo.c

c:\Users\pablo\wkspaces\demowk\foo.c unchecked out correctly

>cm status

/main@default@localhost:8087 (cs:1 - head)

Added

Status Size Last Modified Path

Private 1024 bytes 6 minutes ago bar.c

Create a branch

Now, you already know how to add files to the repo and check in, so the next thing is to create a branch:

cm branch main/task2001

This creates a new branch as a child of the main branch; at this point, it starts from changeset 1.

There is a --changeset modifier to specify the starting point of your new branch.

Now your Branch Explorer will be as follows:

Your workspace is still on the main branch because you haven’t switched to the new branch.

So let’s switch to the new branch this way:

> cm switch main/task2001

Performing switch operation...

Searching for changed items in the workspace...

Setting the new selector...

Plastic is updating your workspace. Wait a moment, please...

The workspace c:\Users\pablo\wkspaces\demowk is up-to-date (cset:1@default@localhost:8087)

And now the Branch Explorer looks like:

The home icon is now located in the new main/task2001 branch, which is still empty.

Let’s modify foo.c in the branch by making a change:

>echo changechangechange > foo.c

>cm status

/main/task2001@default@localhost:8087 (cs:1 - head)

Changed

Status Size Last Modified Path

Changed 21 bytes 8 seconds ago foo.c

Added

Status Size Last Modified Path

Private 1024 bytes 6 minutes ago bar.c

And then a checkin:

>cm ci foo.c -c "change to foo.c" --all

The selected items are about to be checked in. Please wait ...

| Checkin finished 21 bytes/21 bytes [##################################] 100 %

Modified c:\Users\pablo\wkspaces\demowk\foo.c

Created changeset cs:2@br:/main/task2001@default@localhost:8087 (mount:'/')

The Branch Explorer will reflect the new changeset:

From the GUI, it is straightforward to create new branches and switch to them:

The Branch Explorer provides context menus to create new branches from changesets and branches and switch to them. (I’m highlighting the interesting options only, although the context menus have many other interesting options to merge, diff, etc.).

The dialog to create branches in the GUIs (Linux, macOS, and Windows) looks like this:

Here you can type the branch name and an optional comment. Branches can have comments in Plastic, which is very useful because these comments can give extra info about the branch and be rendered in the Branch Explorer.

As you see, there is a checkbox to switch the workspace to the branch immediately after creation.

If you look carefully, you’ll find two main options to create branches: manual and from the task. The image above shows the manual option. Let’s see what the "from task" is all about.

You can connect Plastic to a variety of issue trackers, and one of the advantages is that you can list your assigned tasks and create a branch directly from them with a single click. Learn more about how to integrate with issue trackers by reading the Issue Trackers Guide.

Diffing changesets and branches

The next key tool to master in Plastic is the diff. You’ll probably spend more time diffing and reviewing changes than actually making checkins.

Diffing from the command line

You would use the cm diff command to diff your first changeset:

>cm diff cs:1

A "foo.c"

The output above shows that foo.c was added in changeset 1.

Now, if you diff changeset 2:

>cm diff cs:2

C "foo.c"

The command shows that foo.c is modified on that changeset.

The cm diff command is responsible for diffing both changesets/branches and actual files.

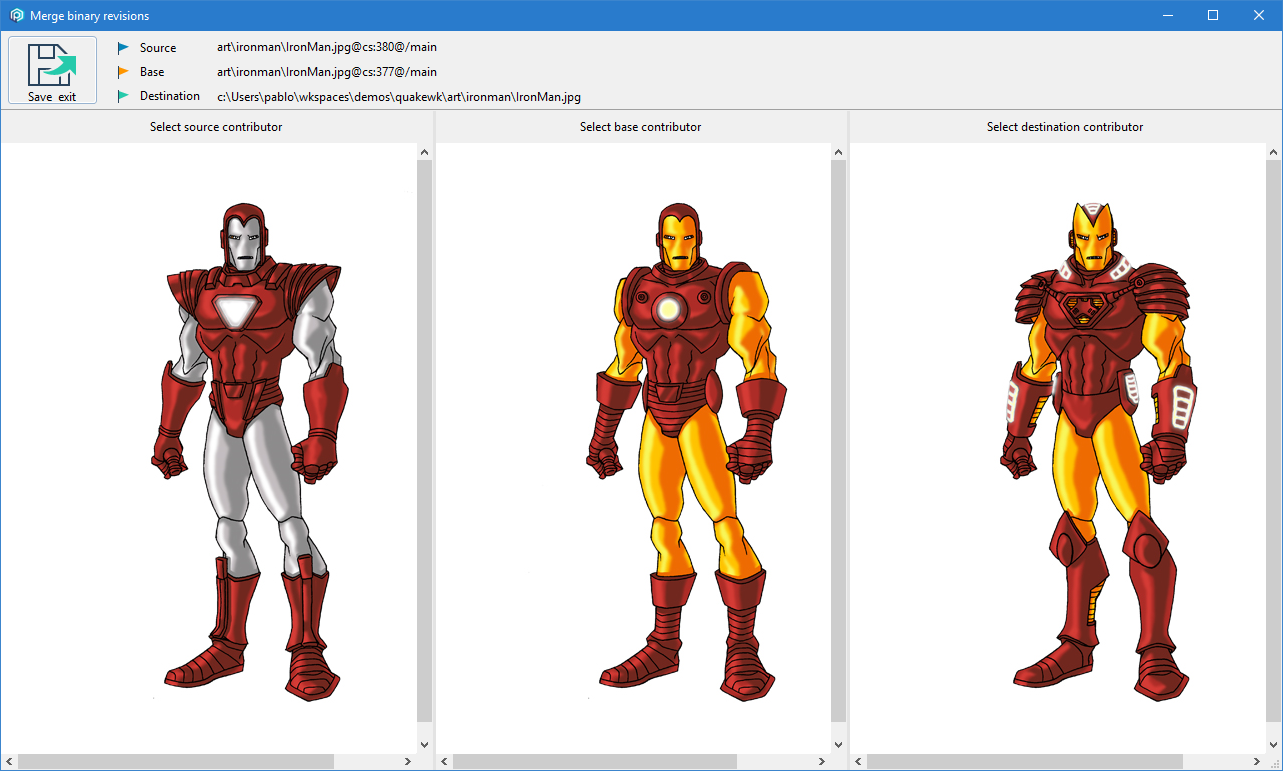

Since the changes made were pretty basic so far, the diffs will be very simple. So let me show you a diff from a branch I have on one of my local repos:

>cm diff br:main/scm23606

C "art\me\me.jpg"

C "art\ironman\IronMan.jpg"

C "code\plasticdrive\FileSystem.cs"

M "code\cgame\FileSystem.cs" "code\plasticdrive\FileSystem.cs"

As you can see, the diff is more complex this time, and it shows how I changed three files and moved one of them in the branch main/scm23606.

So far, we only diffed changesets and branches, but it is possible to diff files too. So, I’m going to print the revision ids of the files changed in scm23606 to diff the contents of the files:

>cm diff br:main/scm23606 --format="{status} {path} {baserevid} {revid}"

C "art\me\me.jpg" 28247 28257

C "art\ironman\IronMan.jpg" 27600 28300

C "code\plasticdrive\FileSystem.cs" 28216 28292

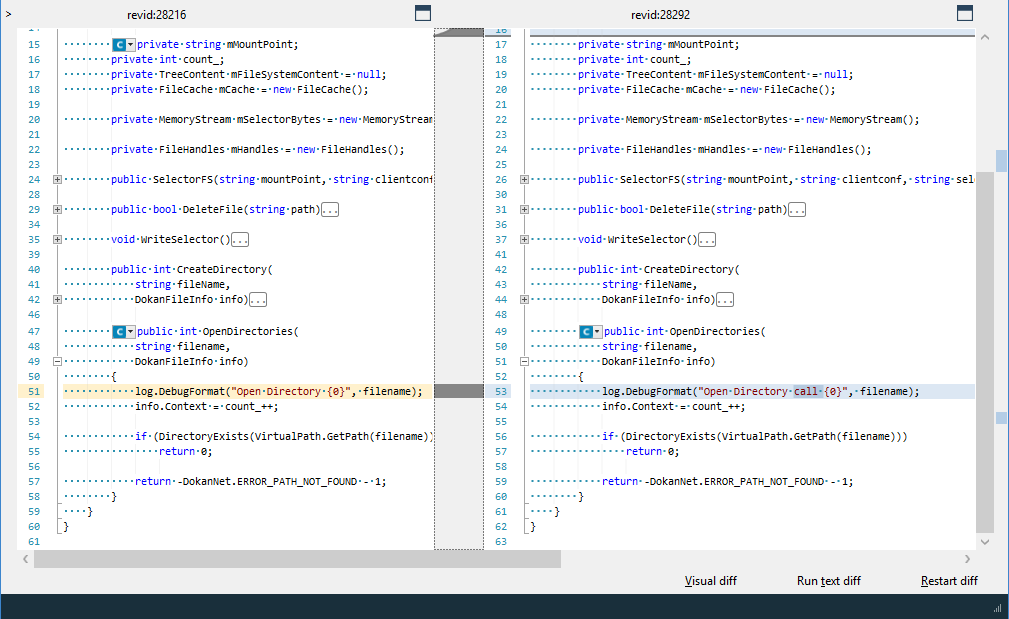

M "code\plasticdrive\FileSystem.cs" -1 28292

Then, I can diff the change made in FileSystem.cs as follows:

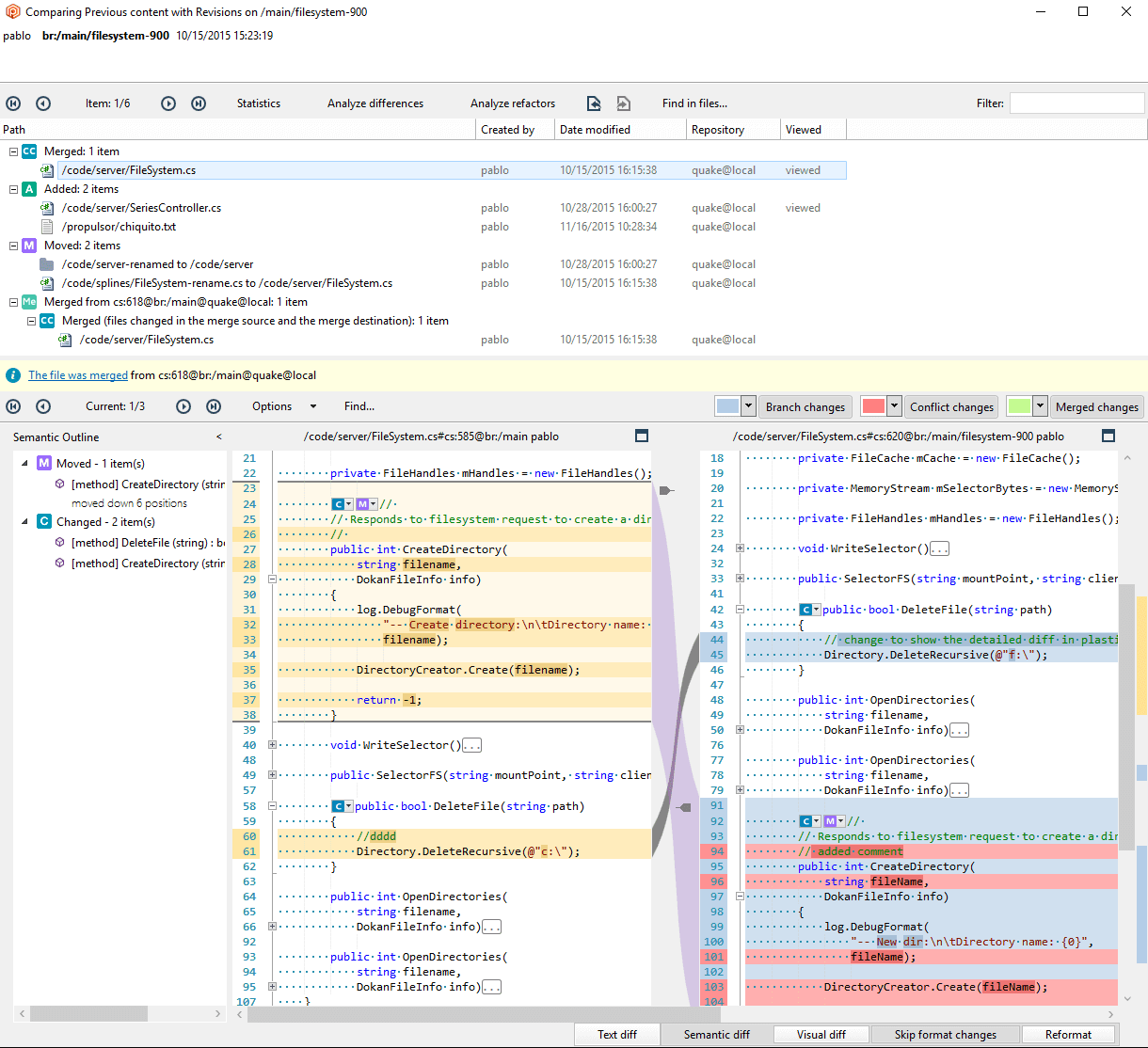

>cm diff revid:28216 revid:28292

Instead of a text diff printed on your console, cm diff launches the diff tool configured for text files. Plastic comes with a built-in diff and merge tool we call Xdiff/Xmerge, and then a GUI like the following displays:

We think GUIs are better tools to show diffs than the typical unified diff displayed by many tools, and that’s why we launch a GUI even when the diff is requested from the command line.

If you really want to see a unified diff printed on the console (because you need to run the diff from a terminal without GUI support, for instance), check our Unix diff from console documentation.

Explore the cm diff help to find all the available options. For example, you can diff branches, individual files, changesets, labels (equivalent to diffing the changeset), or any pair of changesets that you want to compare changes.

>cm diff help

Diffing from the GUIs

Well, it is no secret that we put much more attention into GUIs than the command line when it comes to diffing. This is because we think that 99% of the time, developers will diff using a Plastic GUI instead of the command line.

Diffing changesets and branches is very simple from the Branch Explorer; look at the following figure, where you’ll see how there are diff options in the context menus of branches and changesets (and labels).

Diffing an entire branch is simple. Right-click on it to select it (you’ll see that it changes color) and then select the "diff branch" option.

As the following figure shows, diffing a branch is the equivalent of diffing its head changeset and its parent changeset.

The figure shows how diffing branch task1209 is equivalent to diffing changesets 13 and 23.
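To make the "branch diff equals changeset diff" idea concrete, here is a small sketch that finds the two changesets to compare. It is only a model: the `branch_diff_range` helper and the dictionaries are invented for the example, the intermediate changeset numbers (15 and 19) are hypothetical, and I assume the lowest changeset number on a branch is its first changeset.

```python
def branch_diff_range(parents, branch_of, branch):
    """Return (base, head): the pair of changesets a branch diff compares.

    parents   maps a changeset to its parent changeset.
    branch_of maps a changeset to the branch it lives on.
    """
    on_branch = sorted(cs for cs, br in branch_of.items() if br == branch)
    if not on_branch:
        raise ValueError(f"branch {branch!r} has no changesets")
    first, head = on_branch[0], on_branch[-1]
    base = parents[first]  # the changeset the branch started from
    return base, head


# Hypothetical history: task1209 starts from changeset 13 and ends in 23.
parents = {13: 12, 15: 13, 16: 13, 19: 15, 23: 19}
branch_of = {
    12: "main", 13: "main", 16: "main",
    15: "main/task1209", 19: "main/task1209", 23: "main/task1209",
}
print(branch_diff_range(parents, branch_of, "main/task1209"))  # (13, 23)
```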

You can see the selected changesets are colored in violet; when you multi-select changesets, they are colored differently to highlight the fact that many csets are selected. And, you can right-click and run "diff selected."

The changeset and branch diff windows in the GUI are very powerful, as the following mockup shows:

The changeset/branch diff window first summarizes the changes on a tree with changed, deleted, added, and moved, and then shows the actual side-by-side diff of the selected file.

One of the great advantages of the built-in diff in Plastic is that it can do semantic diffs. This means that the diff can track moving code fragments for certain languages like C#, C++, C, and Java (and then several community-contributed parsers). For example, in the figure above, you can see how the CreateDirectory method was identified as moved to a different location.

Learn more about what "semantic version control" can do.

Merge a branch

Let’s rewind to where we left our straightforward example where we created branch task2001 and made a checkin on it. What if we now want to merge it back to the main branch?

Merging is effortless; you switch your workspace to the destination branch and merge from the source branch or changeset. This is the most typical merge. There is another option, "merge to," which doesn’t require a workspace to run it, but we’ll start with the most common one.

Merging from the GUI

This is what our branch explorer looks like at this point with the main branch and task2001. If you remember, the workspace was in task2001, so the first thing we’ll do is switch to the main branch, by selecting the branch, right-clicking on it, and running the "Switch workspace to this branch" action.

Once the switch is complete, the Branch Explorer will look as follows, and we’ll right-click on task2001 to run a "merge from this branch":

The GUI will guide us through the merge process. In this case, there’s no possible conflict since there are no new changesets on the main branch that could conflict with task2001, so all we have to do is checkin the result of the merge. In the Pending Changes view, you’ll see a "merge link" together with the modified foo.c. Something like this:

See "Plastic merge terminology" in the merge chapter to learn what "replaced" means and also the other possible statuses during the merge.

Now, once the checkin is done, the Branch Explorer will reflect that the branch was correctly merged to main as follows:

We performed the entire merge operation from the Branch Explorer, but you can also achieve the same from the Branches view (list of branches) if you prefer.

Merging from the command line

By the way, it is also possible to merge from the command line. I will repeat the same steps explained for the GUI, but this time, running commands.

First, let’s switch back to the main branch:

>cm switch main

Performing switch operation...

Searching for changed items in the workspace...

Setting the new selector...

Plastic is updating your workspace. Wait a moment, please...

Downloading file c:\Users\pablo\wkspaces\demowk\foo.c (8 bytes) from default@localhost:8087

Downloaded c:\Users\pablo\wkspaces\demowk\foo.c from default@localhost:8087

And now, let’s run the merge command:

>cm merge main/task2001

The file /foo.c#cs:2 was modified on source and will replace the destination version

The cm merge command simply does a "dry run" and explains the potential merge conflicts.

To actually perform the merge we rerun with the --merge flag:

> cm merge main/task2001 --merge

The file /foo.c#cs:2 was modified on source and will replace the destination version

Merging c:\Users\pablo\wkspaces\demowk\foo.c

The revision c:\Users\pablo\wkspaces\demowk\foo.c@cs:2 has been loaded

Let’s check the status at this point:

>cm status

/main@default@localhost:8087 (cs:1 - head)

Pending merge links

Merge from cs:2 at /main/task2001@default@localhost:8087

Changed

Status Size Last Modified Path

Replaced (Merge from 2) 21 bytes 14 seconds ago foo.c

Added

Status Size Last Modified Path

Private 6 bytes 7 minutes ago bar.c

As you can see, it says foo.c has been "replaced," same as the GUI did.

A checkin will confirm the merge:

>cm ci -c "merged from task2001"

The selected items are about to be checked in. Please wait ...

\ Checkin finished 0 bytes/0 bytes [##################################] 100 %

Modified c:\Users\pablo\wkspaces\demowk

Replaced c:\Users\pablo\wkspaces\demowk\foo.c

Created changeset cs:3@br:/main@default@localhost:8087 (mount:'/')

And this way, we completed an entire branch merge from the command line 🙂.
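Why was foo.c simply "replaced" without any conflict? The usual three-way merge reasoning explains it: compare source and destination against their common ancestor. The sketch below is a generic illustration of that reasoning, not Plastic's merge engine. The `merge_decision` function is hypothetical, and only "replaced" is a term taken from the output above; the other result names are mine.

```python
def merge_decision(base, source, destination):
    """Classify one file in a three-way merge.

    base is the content in the common ancestor, source the content in the
    branch being merged, destination the content in the branch you are on.
    """
    if source == destination:
        return "nothing to do"      # both sides already agree
    if destination == base:
        return "replaced"           # only the source changed the file
    if source == base:
        return "keep destination"   # only the destination changed the file
    return "conflict"               # both sides changed it differently


# foo.c in our example: main still has the cs:1 content, task2001 changed it.
print(merge_decision(base="foo", source="changechangechange", destination="foo"))
```

If somebody had also changed foo.c on main in the meantime, the same function would return "conflict," and that is when the merge tool comes into play.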

Learn more

This was just an intro to show you how to perform a basic merge, but there is an entire chapter in this guide about the merge operation where you’ll learn all the details required to become an expert.

Annotate/blame a file

Annotate, also known as blame, tells you where each line of a file comes from. It is extremely useful to locate when a given change was made.

Consider the following scenario where a file foo.cs was modified in each changeset:

And now consider the following changes made to the file in each changeset:

I marked in green changes made in each of the revisions compared to the previous one and specified the author of each change at the top of the file.

The annotate/blame of the file in revision 16 will be as follows:

Take some time to scrutinize the example until you really understand how it works. Annotate takes the contents of the file in changeset 16, then walks back the branch diagram diffing the file until the origin of each line is found.

In our case, it is clear that lines 10, 20, 40, 80, and 90 come from the changeset 12 where the file was initially added.

Then line 30, where Borja changed the return type of the Main function from void to int, belongs to changeset 13.

Line 50 is where Pablo changed Print("Hello"); to Print("Bye"); and it is attributed to changeset 15.

Then line 60 is marked in red because it was modified during a merge conflict; changeset 14 in main/task2001 modified the line differently than changeset 15 in main, so Jesús had to solve the merge conflict and decided to modify the loop, so it finally goes from 1 to 16.

Finally, line 70 comes from changeset 14 in task2001 where Miguel modified the line to be Print(i+1);

Since Plastic always keeps branches (see "We don’t delete task branches"), annotate tells you the task where a given change was introduced, which is a great help to link to the right Jira issue or any issue tracker you might use.

Please note that the GUI will use a gradient to differentiate the most recent changes from the older ones.

Annotate/blame from command line and GUI

Annotating a file is easy from the GUI: navigate to the Workspace Explorer, locate the file, right-click, and select "annotate."

From the command line, run:

> cm annotate foo.c

You annotate a given revision of a file

You don’t annotate a file but a given revision of a file. For example, in the previous example, annotating the file in changeset 16 is not the same as changeset 14 or 15.

For example, if you annotate changeset 12, all the lines will be attributed to changeset 12 since this was where the file was added. If you annotate 13, all lines will belong to 12 except line 30 marked as created in 13.

If a line is modified several times, the changeset closest to the destination changeset will be marked as the "creator" of the line.
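If you want to play with the idea, here is a minimal annotate sketch. It is a simplification under loud assumptions: it only handles a linear history (no merges, so nothing like the red line 60 case), it uses Python's standard difflib instead of Plastic's diff, and the `annotate` function and the sample revisions are made up for the example.

```python
from difflib import SequenceMatcher


def annotate(history):
    """Attribute each line of the newest revision to a changeset.

    history is a list of (changeset_id, lines) ordered from oldest to
    newest. Returns a list of (changeset_id, line) pairs.
    """
    first_id, first_lines = history[0]
    blame = [(first_id, line) for line in first_lines]
    for cset_id, lines in history[1:]:
        old_lines = [line for _, line in blame]
        matcher = SequenceMatcher(a=old_lines, b=lines, autojunk=False)
        new_blame = []
        for tag, i1, i2, j1, j2 in matcher.get_opcodes():
            if tag == "equal":
                # Untouched lines keep their original author changeset.
                new_blame.extend(blame[i1:i2])
            elif tag in ("replace", "insert"):
                # New or modified lines belong to the current changeset.
                new_blame.extend((cset_id, line) for line in lines[j1:j2])
            # "delete": the lines are gone, nothing to attribute.
        blame = new_blame
    return blame


history = [
    (12, ["void Main()", "{", 'Print("Hello");', "}"]),
    (13, ["int Main()", "{", 'Print("Hello");', "}"]),
    (15, ["int Main()", "{", 'Print("Bye");', "}"]),
]
for cset_id, line in annotate(history):
    print(cset_id, line)
```

Annotating only the first two revisions attributes everything to 12 except the changed return type, which goes to 13; adding the third revision moves the Print line to 15, because the latest change to a line wins.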

Push and pull

Unless you’ve been living under a rock, you’ve heard of distributed version control (DVCS), and you know that the two key operations are push and pull.

I’m not going to explain what they mean in detail since we have a chapter dedicated to distributed operation, but I’ll just introduce the basics.

Suppose you create a new repository and want to clone the main branch created in the default repo to the new repository.

> cm repository create cloned@localhost:8087

> cm push main@default@localhost:8087 cloned@localhost:8087

The cm push command pushes the main@default branch to the "cloned" repo.

Imagine that, later, some new changes are created in main@cloned, and you want to pull them to main@default.

> cm pull main@cloned@localhost:8087 default

I used a couple of repos on my localhost server, but the following would be possible:

> cm push main@default@localhost:8087 default@codice@cloud

> cm push main@default@localhost:8087 newrepo@skull.codicefactory.com:9095

Provided that the destination repos default@codice@cloud and newrepo@skull.codicefactory.com:9095 were previously created.

Pushing and pulling from the GUI is even easier since there are options to do that from every branch context menu in the Branch Explorer view and Branches view.

Finally, the GUI comes with a sync view to let you synchronize many branches in bulk. It is worthwhile to learn how the sync view works by reading the GUI reference guide.

Learn more

- Master the most frequent command line actions by reading the command line guide.

Keep reading this guide to learn more details about Plastic SCM 🙂.

One task - one branch

Before we jump into how to do things in Plastic, I will describe in detail the work strategy we recommend to most teams. Once that is clear, finding your way through Plastic will be straightforward.

As we saw in the section "A perfect workflow", task branches are all about moving high-quality work quickly into production.

Each task will go through a strict process of code development, code review and testing before being safely deployed to production.

This chapter covers how to implement a successful branch per task strategy, including all the techniques and tactics we learned over the years.

Branch per task pattern

We call it task branches, but the actual branch per task strategy has been around for more than 20 years.

There are many different branching patterns but, over the years, we concluded task branches are really the way to go for 95% of the scenarios and teams out there.

The pattern is super simple; you create a new branch to work on for each new task in your issue tracker.

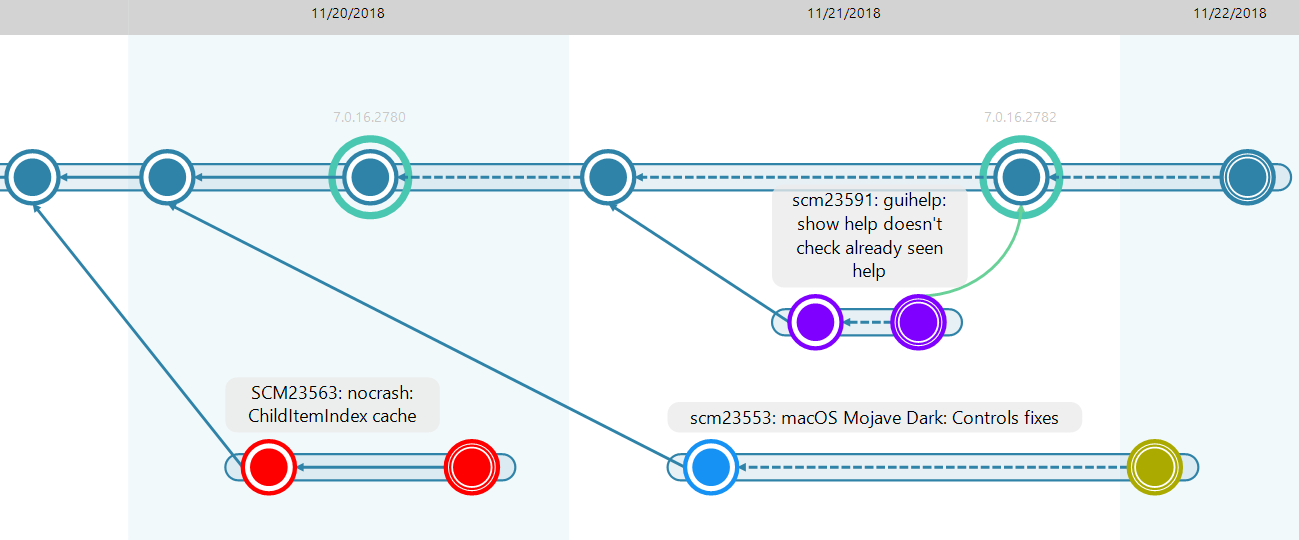

The following is a typical diagram (it perfectly fits with the Branch Explorer visualization in Plastic) of a branch per task pattern:

task1213 is already finished and merged back to main, task1209 is longer and still ongoing (and it has many changesets on it), and task1221 was just created, and the developer only performed a single checkin on it.

Branch naming convention

We like to stick to the following pattern: prefix + task number. That’s why the branches in the example above were named task1213, task1209, and task1221. "task" is the prefix and the number represents the actual task number in the associated issue tracker.

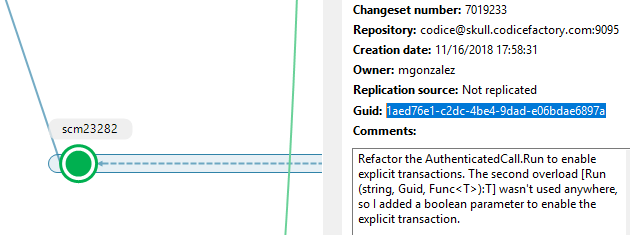

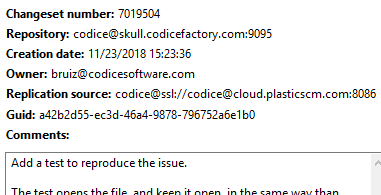

The following is an example from our repo:

You can see how we use the prefix "SCM" and then the task number. In our case, we use SCM because we build a source code management system 🙂. You see how some team members prefer SCM in uppercase, and others use lowercase… well; I believe it is just a matter of self-expression.

We also use the prefixes to link with the issue tracker, for example, with Jira.
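Since the convention is just "prefix + task number," a script can recover the task number from a branch name with a short regular expression. This is a sketch of the convention described here, not something Plastic ships: `parse_task_branch` is a hypothetical helper, and lowercasing the prefix is my own choice to cope with the SCM/scm mix.

```python
import re

# Letters (the prefix) followed by digits (the task number).
BRANCH_NAME = re.compile(r"^(?P<prefix>[A-Za-z]+)(?P<number>\d+)$")


def parse_task_branch(branch):
    """Return (prefix, task_number) for names like main/task1213, else None."""
    leaf = branch.rstrip("/").split("/")[-1]  # last path segment of the branch
    match = BRANCH_NAME.match(leaf)
    if match is None:
        return None
    return match.group("prefix").lower(), int(match.group("number"))


print(parse_task_branch("main/task1213"))  # ('task', 1213)
print(parse_task_branch("main/SCM23606"))  # ('scm', 23606)
print(parse_task_branch("main"))           # None
```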

The screenshot also shows a description for each branch together with the number. This is because the Branch Explorer retrieves it from the issue tracker. You can also see the branch description by selecting "display branch task info".

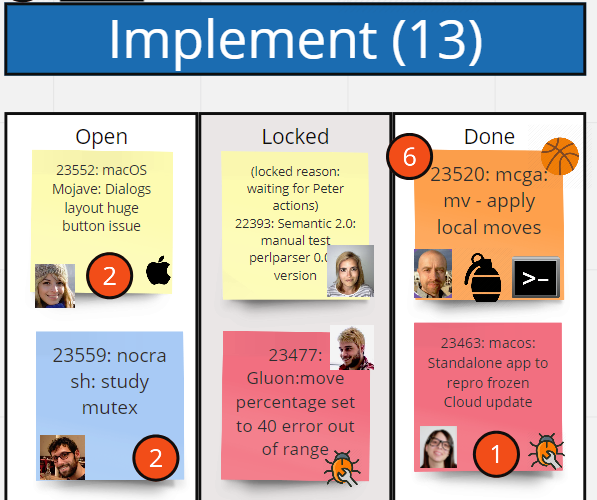

We see some teams using long branch names that describe the task. Is that wrong? Not really, but we truly prefer to drive the conversation with unambiguous numbers. In fact, see what our Kanban board looks like on any day: the entire discussion is always about tasks, and there’s no doubt where the associated code is.

Task branches are short

Remember the Scrum rule that says tasks shouldn’t be longer than 16 hours to ensure they are not delayed forever and keep the project under control? Well, we love that.

At the time of writing, we had just switched to Kanban for a couple of months after almost 300 sprints of two weeks each. We changed to Kanban to reduce task cycle times, but we like many practices of Scrum. And keeping tasks short is one of them.

Task branches must be closed quickly. It is great to have many small tasks you can close in just a few hours each. It is good to keep the project rhythm, to keep the wheels spinning, and never stop deploying new things. A task that spans a week stops the cycle.

"But some tasks are long" – I hear you cry. Sure, they are. Splitting bigger tasks into smaller ones is an art and science in itself. You need to master it to get the best out of task branches and a solid overall continuous delivery cycle.

There are a few red flags to keep in mind:

- Don’t create "machete cut" tasks. You ask someone to cut a task into smaller pieces, and then they create a pile of smaller ones that don’t make any sense in isolation and can’t be deployed independently. No, that’s not what we are looking for. Of course, creating shorter tasks from a bigger one can be daunting at times, but it is nothing you can’t solve by throwing a little bit of brainpower at it.
- Every team member needs to buy into it. It is not just about "let’s make all tasks consistently shorter"; it is about understanding life is easier if you can close and deliver a small piece of a larger task today instead of struggling with it for a few days before delivering anything. It is all about openly communicating progress, avoiding silos, and removing "I’m at 80% of this" day after day. Predictability, responding to problems quickly, and the agile philosophy are all behind the "short branches" motto.

Task branches are not feature branches

I admit that sometimes I wrongly use both terms interchangeably. Feature branches went viral thanks to Git Flow in the early Git explosion days. Everyone seemed to know what a feature branch was, so sometimes I used it as a synonym for task branches. They are not.

A feature can be much longer than a task. It can take many days, weeks, or months to finish a feature. And it is definitely not a good idea to keep working on a branch for that long unless you want to end up with a big-bang integration.

I’m not talking just about merge problems (most of our reality here at Plastic is about making impossible merges easy to solve, so that’s not the problem), but about project problems. Delay a merge for a month, and you’ll spend tons of unexpected time making it work together with the rest of the code, and solving merge problems will be the least of your problems.

Believe me, continuous integration, task branches, shorter release cycles were all created to avoid big bangs, so don’t fall into that trap.

To implement a feature, you will create many different task branches, each of them will be merged back as soon as it is finished, and one day, the overall feature will be complete. And, before you ask: use feature toggles if the work is not yet ready to be public, but don’t delay integrations.

Keep task branches independent

Here is the other skill to master after keeping task branches short: keep task branches independent.

Here is a typical situation:

You just finished task1213 and have to continue working on the project, so the obvious thing to do is simply continue where you left it, right?

Wrong.

This happens very often to new users. They just switched from doing checkins to trunk continuously and feel the urge to use their previous code, and even a bit of vertigo (merge-paranoia) if they don’t.

You have to ask yourself (or your teammates) twice: do you really need the code you just finished in task1213 to start task1209? Really? Really?

Quite often, the answer will be no. Believe me. Tasks tend to be much more independent than you first think. Yes, maybe they are exactly on the same topic, but you don’t need to touch exactly the same code. You can simply add something new and trust the merge will do its job.

There is a scenario where all this is clearer and more dangerous: suppose that 1213 and 1209 are bug fixes instead of tasks. You don’t want one to depend on the other. You want them to hit main and be released as quickly as possible; even if they touch the same code, they are different fixes. Keep them independent!

And now you also get the point of why keeping tasks independent matters so much. Look at the following diagram:

task1209 was merged before task1213. Why is this a problem? Well, suppose each task goes through a review and validation process:

- task1209 was reviewed and validated, so the CI system takes it and merges it.
- But task1213 is still waiting. It wasn’t reviewed yet… but it reached main indirectly through 1209.

See the problem?

You’re probably thinking: yes, but all you have to do is tell the team that 1209 doesn’t have to be merged before 1213 is ready.

I hear you. And it worked moderately well in the times of manual integration, but with CI systems automating the process now, you are just creating trouble. Now, you have to manually mark the task somehow so that it is not eligible for merge (with our mergebots and CI integrations, it would be just setting an attribute to the branch). You are just introducing complexity and slowing the entire process down. Modern software development is all about creating a continuous flow of high-quality changes. These exceptions don’t help.
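A quick way to convince yourself is to walk the changeset graph. The sketch below collects every branch whose changesets land in main when a given head is merged. It is only a model of the situation described above: the function name, the dictionaries, and the changeset numbers are all invented for the example, and real mergebots don't necessarily work this way.

```python
def branches_reaching_main(parents, branch_of, merged_head, in_main):
    """Return the branches whose changesets arrive in main with a merge.

    parents   maps a changeset to the list of its parent changesets.
    branch_of maps a changeset to the branch it lives on.
    in_main   is the set of changesets already reachable from main.
    """
    brought = set()
    seen = set()
    pending = [merged_head]
    while pending:
        cs = pending.pop()
        if cs in seen or cs in in_main:
            continue  # already visited, or main already has it
        seen.add(cs)
        brought.add(branch_of[cs])
        pending.extend(parents.get(cs, []))
    return brought


# Hypothetical history: task1213 (4, 5) starts from main changeset 3,
# and task1209 (6, 7) was started from the head of task1213.
parents = {4: [3], 5: [4], 6: [5], 7: [6]}
branch_of = {4: "task1213", 5: "task1213", 6: "task1209", 7: "task1209"}
reviewed = {"task1209"}

brought = branches_reaching_main(parents, branch_of, merged_head=7, in_main={1, 2, 3})
print(sorted(brought - reviewed))  # ['task1213']
```

The unreviewed task1213 shows up in the result even though only task1209 was merged, which is precisely the problem with dependent task branches.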

What if you really need tasks to depend on each other

There will be cases where dependencies will be required. Ask yourself three times before taking the dependency for granted.

If tasks need to depend on each other, you need to control the order in which they’ll be integrated. If you are triggering the merges manually (discouraged), ensure there is a note for the build-master or integrator in charge.

If you have an automatic process in place, you’ll need to mark branches somehow so they are not eligible for merge by the CI system or the mergebot.

Techniques to keep branches independent

This is a pretty advanced topic, so if you are just finding your way through Plastic, it is better to come back to it when you have mastered the basics.

There will be other cases where you’ll be able to keep tasks independent with a bit of effort.

Very often, when you need to depend on a previous task, you need just some of the changes done there.

Use cherry pick carefully to keep branches independent:

If that’s the case, and provided you were careful enough to isolate those changes in a separate changeset (more on that in the "Checkin often and keep reviewers in mind" section), you can do a cherry pick merge (as I explain in the "Cherry picking" section).

A cherry pick will only bring the changes made in changeset 13, not the entire branch. The branches will continue being primarily independent and much safer to merge independently than before.

Of course, this means you must be careful with checkins, but that’s something we also strongly encourage.

Add a file twice to keep tasks independent:

There is a second technique that can help when you add a new file on task1213 and you really need this file in the next task. For example, it can be some code you really need to use. This is a little bit contrived, but it works: Add the file again in task1209. Of course, this only works when you just need something small. If you need everything, copying is definitely not an option.

Now, you probably think I’m crazy: Copy a file manually and checkin again? Isn’t it the original sin that version control solves?

Remember, I’m trying to give you choices to keep tasks independent, which is desirable. And yes, this time, this technique can help.

You’ll be creating a merge conflict for the second branch (an evil twin as we call it) that you’ll need to solve, but tasks will stay independent.

The sequence will be as follows: task1209 will merge without conflict.

Then, when task1213 tries to merge, a conflict is raised:

If you are doing manual merges to the main branch, all you have to do is solve the conflict and checkin the result. So it won’t be that hard.

But, if you are using a CI system or a mergebot in charge of doing the merges, there is no chance to resolve conflicts manually there, so the task will be rejected.

If that happens, you’ll have to follow what we call a rebase cycle: simply merge down from main to task1213 to solve the problem, and then the branch will merge up without conflicts.
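The whole sequence can be simulated with a toy three-way merge in Python. This is a simplified sketch, not Plastic’s merge algorithm: a tree maps each path to an invented item identifier, and two files added at the same path with different identifiers stand in for the evil twin. All file names and identifiers are assumptions for illustration.

```python
def merge(base, source, destination):
    """Toy three-way merge; returns (result_tree, conflicting_paths)."""
    result = dict(destination)
    conflicts = []
    for path in sorted(set(base) | set(source) | set(destination)):
        b, s, d = base.get(path), source.get(path), destination.get(path)
        if s == b:
            continue  # source did not touch this path, keep destination
        if d == b:
            # Only the source changed this path: take its version.
            if s is None:
                result.pop(path, None)
            else:
                result[path] = s
        elif s != d:
            conflicts.append(path)  # both sides changed it differently
    return result, conflicts


# Hypothetical item ids: the same path added twice yields two different items.
main0 = {"main.c": "item-1"}
task1213 = {"main.c": "item-1", "helper.c": "item-2", "feature.c": "item-4"}
task1209 = {"main.c": "item-1", "helper.c": "item-3"}  # helper.c added again

# task1209 merges up without conflict.
main1, conflicts = merge(main0, task1209, main0)
print(conflicts)

# task1213 now hits the evil twin, so an automated merge is rejected.
_, conflicts = merge(main0, task1213, main1)
print(conflicts)

# Rebase cycle: merge down from main and solve the conflict by hand,
# here by keeping the helper.c that is already in main.
rebased, conflicts = merge(main0, main1, task1213)
rebased["helper.c"] = main1["helper.c"]

# After the merge down, main1 is the common ancestor and the merge up is clean.
main2, conflicts = merge(main1, rebased, main1)
print(conflicts)
```

The first and last merges report no conflicts, and the second one reports `helper.c`. The manual work happens once, in the task branch, where a developer can actually do it.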

Note for Git users

Git rebase is very different from what we call rebase in Plastic. Rebase in Plastic is simply "merge down" or "changing the base." For example, branch

Checkin often and keep reviewers in mind

Ever heard this? "Programs must be written for people to read, and only incidentally for machines to execute." It is from the legendary book "Structure and Interpretation of Computer Programs" (MIT).

It is a game-changer. We should write code thinking about people, not computers. That’s the cornerstone of readable code, the key to simple design. It is one of the cornerstones of agile, extreme programming, and other techniques that put code in the center of the picture.

Well, what if you also checkin for people to read? Yes, it might not be easy at first, but it really pays off.

Let’s start with a few anti-patterns that will allow me to explain my point here.

Antipattern 1: Checkin only once

Imagine that you have to do an important bug fix or add a new feature, but you need to clean up some code before that.

This is one way to do it:

Then the reviewer comes, diffs changeset number 145, finds there are 100 modified files, and… goes for a coffee or lunch, or wants to leave for the day… ouch!

And this is how the traditional way to diff branches provokes context switches, productivity loss, or simply "ok, whatever, let’s approve it."

Antipattern 2: Checkin for yourself

Let’s try again with a different checkin approach:

This time the developer checked in several times during development. It is beneficial for him because he protected his changes to avoid losing them in the event of a weird crash or something. Multiple checkins can also help when you are debugging or doing performance testing. Instead of commenting code out, going back and forth, and going crazy, you create real "checkpoints" that you know you can safely go back to later if you get lost along the way.

But the reviewer will go and simply diff the entire branch because the individual checkins won’t be relevant for him. And the result will be as demotivating as it was in the previous case: 100+ files to review. Ouch!

Checkin for the reviewer

Now, let’s follow the rule we all use: checkin with the reviewer in mind. Every checkin has to help the reviewer follow your train of thought, follow your steps to understand how you tackled the task.

Let’s try again:

Now, as a reviewer, you won’t go and diff the entire branch. Instead, you’ll diff changeset by changeset. And, you’ll be following the pre-recorded explanation the author made to clarify each step of the task. You won’t find yourself facing a daunting list of 100+ modified files. Instead, you’ll go step by step.

First, you see 21 files modified, but the comment says it was just about cleaning up some C# usings. The list of 21 files is not terrifying anymore; it is just about removing some easy stuff. You can quickly glance through the files or even skip some of them.

Then, the next 12 files are just about a method extracted to a new class, and the affected callers having to adapt to the new call format. Not an issue either.

Next come 51 files, but the comment clearly says it is just because a method was renamed. Your colleague is telling you it is a trivial change, probably done thanks to the IDE refactoring capabilities in just a few seconds.

Then comes the actual change, the difficult one. Fortunately, it only affects 2 files. You can still spend quite a lot of time on this, really understanding why the change was done and how it works now. But it is just two files. Nothing like the shock of initially seeing 100 changed files.

Finally, the last change is a method that has been removed because it is no longer invoked.

Easier, isn’t it?

Objection: But… you need to be very careful with checkins, that’s a lot of work!

Yes, I hear you. Isn’t it a lot of work to write readable code? Isn’t it easier and faster to just put things together and never look back? It is not, right? The extra work of writing clean code pays off in the mid-term when someone has to touch and modify it. It pays off even if the "other person touching the code" is your future self coming back just three months later.

Well, the same applies to carefully crafted checkins. Sure, they are more complex than "checkpoint" checkins, but they really (really!) pay off.

And, the best thing is that you get used to working this way. Just as writing readable code becomes part of your way of working once you get used to it, doing checkins with reviewers in mind just becomes natural.

And, since development is a team sport, you’ll benefit from others doing that too, so everything flows nicely.

Task branches turn plans into solid artifacts that developers can touch

This is where everything comes together. As you can see, branches don’t live in isolation. They are not just version control artifacts that live in a different dimension than the project. It is not like branches are for coders while plans, scrums, tasks, and all that stay in a distant galaxy. Task branches close the gap.

Often, developers not yet using branch per task tend to think of tasks as some weird abstract artifact separated from the real job. It might sound weird, but I bet you’ve experienced that at some point. When they start creating a branch for each task, tasks become something they touch. Tasks become their reality, not just a place where some crazy pointy-haired boss asked them to do something. Task branches change everything.

Handling objections

But, do you need a task in Jira/whatever for every task? That’s a lot of bureaucracy!

It is a matter of getting used to it. And it takes no time, believe me. We have gone through this many times already.

Once you get used to it, you feel naked if you don’t have a task for your work.

Submitting a task will probably be the job of some product manager/scrum master/you-name-it most of the time. But even if, as a developer, you have to submit it (we do it very often), remember that every minute spent describing what to do will save you an immense number of questions, problems, and misunderstandings.

I know this is obvious for many teams, and you don’t need to be convinced about how good having an issue tracker for everything is, but I consider it appropriate to stress the key importance of having tasks in branch per task.

We use an issue tracker for bugs but not new features.

Well, use it for everything. Every change you make in code must have an associated task.

But some new features are big, and they are just not one task and…

Sure, that’s why you need to split them. Probably what you are describing is more a story or even an epic with many associated tasks. I’m just focusing on tasks, the actual units of work: The smallest possible pieces of work that are delivered as a unit.

Well, fine, but this means thousands of branches in version control. That’s unmanageable!

Plastic can handle thousands of branches!

This is the list of branches of our repositories that handle the Plastic source code (sort of recursive, I know 😉):

This was more than 18 thousand branches when I took the screenshot. Plastic doesn’t have issues with that. It is designed for that.

Now, look at the following screenshot: The main repo containing the Plastic code filtered by 2018 (almost the entire year).

You see on the diagram (the upper part with all these lines) the area marked in red in the navigator rendered at the bottom. Yes, all these lines just belong to a tiny fraction of what happened over the year.

So, no, Plastic doesn’t break with tons of branches, and neither do the tools we provide to browse them.

Ok, Plastic can deal with many branches, but how are we supposed to navigate that mess?

The zoom-out example produces this response very often: "It can deal with it, but this is a nightmare!"

Well, the answer is simple: You’ll never use that zoom level, same as you won’t set the zoom of your satnav to show all the roads in Europe to find your way around Paris.

This is what I usually see in my Branch Explorer: just the branch I’m working on plus the related ones, the main branch in this case. Super simple.

Task branches as units of change

We like to think of branches as the real units of change. You complete a change in a branch, doing as many checkins as you need, and creating several changesets. But the complete change is the branch itself.

Maybe you are used to thinking of changes in different terms. In fact, the following is very common:

This is a pattern we call changeset per task, and it was prevalent in the old SVN days. With this approach, every single changeset is a unit of change. The problem, as you can see, is that you use version control as a mere delivery mechanism. You are done, you checkin, but you can’t checkin for the reviewer, narrating a full story, explaining changes one by one, isolating dangerous or difficult changes in a specific changeset, and so on.

Instead, we see the branch as the unit of change. You can treat it as a single unit, or look inside it to discover its own evolution.

What happens when a task can’t be merged automatically?

The purpose of task branches with full CI automation is to automatically test, merge, and deploy tasks when they are complete. The idea is that the integration branch, typically the main branch, is protected so nothing except the CI system or the mergebot driving the process can touch it.

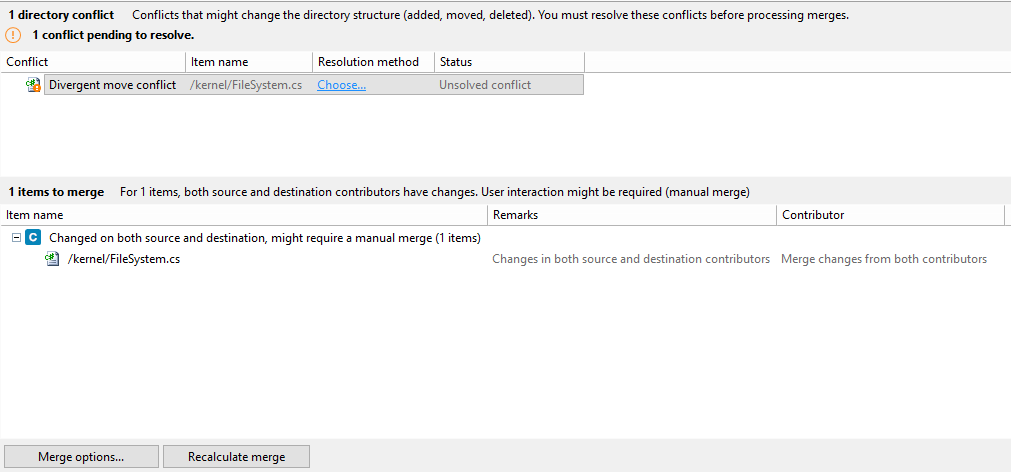

So, what happens when a merge conflict can’t be solved automatically?

Consider the following scenario where a couple of developers —Manu and Sara— work on two branches. Suppose at a certain point Manu marks his branch as done.

The CI system monitoring the repo, or even better, the Plastic mergebot driving the process, will take task1213, merge it to the main branch in a temporary shelf, ask the CI to build and test the result, and if everything goes fine, confirm the merge.

The mergebot will notify the developer (and optionally the entire team) that a new branch has been merged and a new version labeled. Depending on your project, the new version BL102 may also be deployed to production.

Now Sara continues working on task1209 and finally marks it as complete, letting the mergebot know that it is ready to be merged and tested.

But unfortunately, the changes made by Manu, which were already merged to main to create BL102, collide with the ones made by Sara, and the merge requires manual intervention.

The merge process is driven by the CI system or the mergebot, but neither can resolve conflicts that require manual intervention.
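The decision the automated process makes for each finished branch can be sketched in a few lines of Python. This is only an illustration of the flow described above, not the mergebot’s code: `process_task`, the `Outcome` class, and the callbacks passed in are hypothetical names, and the conflict and test results are hard-coded to mirror the Manu and Sara scenario.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Outcome:
    branch: str
    status: str  # "merged", "rejected: conflicts", or "rejected: tests failed"
    label: Optional[str] = None


def process_task(branch, find_conflicts, build_and_test, next_label):
    """Try the merge in a temporary area, test it, and only then confirm it."""
    if find_conflicts(branch):
        # Nobody is there to solve conflicts by hand, so the task is rejected.
        return Outcome(branch, "rejected: conflicts")
    if not build_and_test(branch):
        return Outcome(branch, "rejected: tests failed")
    return Outcome(branch, "merged", next_label)


# Hard-coded stand-ins for the real merge and the real CI run.
def find_conflicts(branch):
    return ["some/file"] if branch == "task1209" else []


def build_and_test(branch):
    return True


print(process_task("task1213", find_conflicts, build_and_test, "BL102"))
print(process_task("task1209", find_conflicts, build_and_test, "BL103"))
```

In this sketch, task1213 is merged and labeled BL102, while task1209 is rejected because of the conflict and never reaches the build step. The label BL103 is just the next one I would expect, so treat it as an assumption.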

So what is the way to proceed?

Sara needs to run a merge from the main branch to her branch to solve the conflicts and make her branch ready to be automatically merged by the mergebot or CI.